Thinking about agents: enablement and over-reliance

AI is fantastic when we can't do a task.

But what about when we can, and we simply choose not to, out of laziness, pressure to be more productive, or competition?

If you're like me, you're being bombarded with people using AI to do cool things like build apps for themselves or automate their lives. Even Andrej Karpathy has hopped onto the vibe-coding trend, and he also uses LLMs every day.

It's thrilling to offload our daily drudgery onto tools that can do it all for us. More and more systems promise to automatically write our emails, manage our social media, read our documents, and help us code. When AI genuinely succeeds in saving us hours of sweat every week, it can be hard not to fall in love.

But I've been wrestling with a question: Just because we can offload so many tasks, does that mean we should?

Enablement

After a decade working in AI, I stepped away from my role building a platform for fine-tuning LLMs to take a break and work on projects I'd long postponed. With AI lowering the barriers to rapid, meaningful progress, these projects finally felt within reach.

For example, the paper we published using a decentralized council of LLMs to self-rank would have taken my last research team at Google several months just to build the experimentation harness, another several months for experiments, and weeks more to visualize results and write the paper. Yet with AI, our tiny team of mostly independent researchers produced a paper accepted to ACL — most of the work done in just a few weeks.

AI has grown and changed so much since I started in 2014. The days of researchers lamenting that LLMs are stochastic parrots are numbered. People in my network with minimal coding experience are using AI to build real tools aligned with their interests. For example, my Product Lead friend with near-zero SWE experience has built multiple tools aligned with his personal passions (e.g. meditation coaching app Apollo), and my partner who has never written a single line of code in his life built a patient-staff scheduling tool for his medical team at Stanford hospital. These tools are simple, but there's a lot of low-hanging fruit like this that can have a transformative impact on organizations. No SWEs needed, no fine-tuning, just use your words.

Projects that seem daunting are suddenly accessible, not just for AI specialists but for nearly anyone with an idea. Earlier this week, I made an app that transcribes, diarizes, and summarizes YouTube and TikTok videos, from start to finish, in 20 minutes.

It's not just apps that are accelerating due to AI; AI is also revolutionizing data analysis and research. On average, more than 21 AI research papers are now published on Hugging Face every day, more than double the number from a year ago (Source).

If LLMs were already game-changers, LLM agents (LLMs that can semi-autonomously execute on ambiguous tasks) push the boundaries further. Some of my favorite demos:

OpenAI's Deep Research (as well as versions from HF, You, Jina, and Gemini; comparison)

Tutorials from stephengpope@ using tools like n8n and Make

This past quarter has seen a deluge of agent-related releases (OpenAI's Agents SDK, Anthropic's MCP, and Manus, just to name a few). Each new agentic system promises to enable LLMs to handle tasks both tedious and complex with increasingly minimal human input.

When you spend enough time with these tools, you see their limits, but you also see amazing capabilities, and it's hard not to get excited about what's coming next.

In Sam Altman's recent essay "Three Observations", he makes a bold prediction that "In a decade, perhaps everyone on earth will be capable of accomplishing more than the most impactful person can today". It's optimistic, but at the pace we're going, it feels within reach.

Claude Plays Pokemon. Does beating the game mean we've reached AGI?

Over-reliance

AI seems genuinely fantastic when we truly can't do a task. But what about when we can, and we simply choose not to, out of laziness, pressure to be more productive, or competition?

Research has long shown that when we stop exercising a skill ourselves, it tends to atrophy. For example, it's not uncommon for engineering managers who haven't coded in years to struggle when they look for new jobs. Research also suggests reliance on technology can lead to cognitive skill erosion.

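Ophir, Nass, and Wagner (2009) studied media multitasking and found that heavy multitaskers were worse at filtering out irrelevant information and switching between tasks, suggesting that over-reliance on digital tools can weaken focus and deep learning.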

Clements et al. (2011) argue that while calculators can be helpful, they should be introduced only after conceptual mathematical understanding is solid.

Ishikawa et al. (2008) found that people who use GPS frequently develop worse spatial awareness and topographical memory and often lose their intuitive sense of spatial relationships.

Barr et al. (2015) showed that frequent smartphone users demonstrate lower analytical thinking because they rely on quick lookups instead of deep reasoning.

Manu Kapur (2016) suggests that struggling with problems before receiving instruction leads to deeper learning and retention.

Bjork and Bjork (2011) argue that learning should involve challenges and setbacks because effortful retrieval strengthens memory and understanding. If things are too easy, deeper comprehension is hindered.

Every time we ask AI to write code for something instead of doing it ourselves, we may be weakening the neural "muscles" we once used for those tasks.

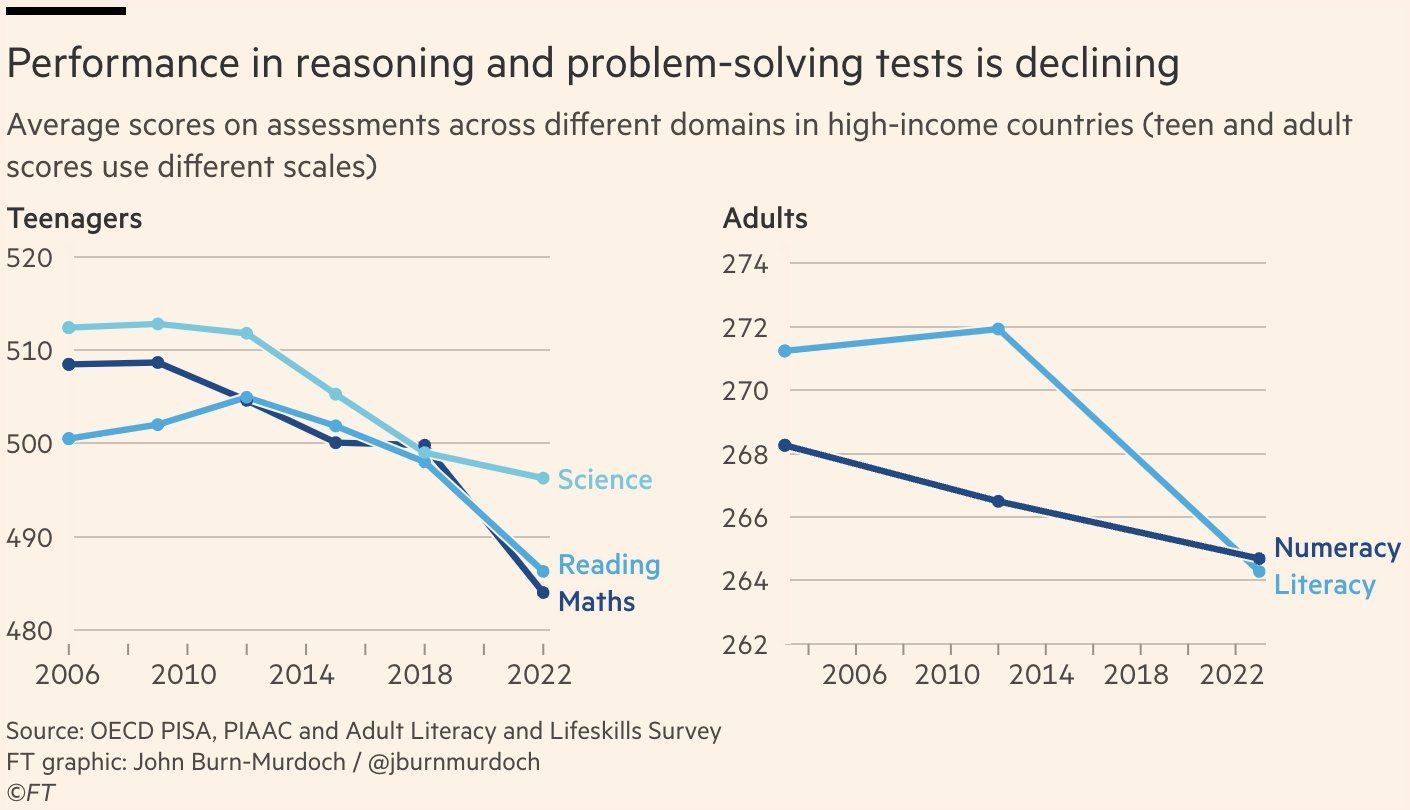

The Financial Times reports that average information processing, reasoning, and problem-solving capabilities have been declining since the mid-2010s.

Nobody would argue that the fundamental biology of the human brain has changed in that time span. But there is growing evidence that the extent to which people can practically apply that capacity has been diminishing. Is this just a byproduct of exponential technological progress? What happens to our brains in a world where AI becomes our generalized calculator for everything?

‘Brain rot’ (Oxford's word of the year in 2024) is defined as “the supposed deterioration of a person’s mental or intellectual state, especially viewed as the result of overconsumption of material (now particularly online content) considered to be trivial or unchallenging.”

What happens to our brains in a world where AGI makes everything trivial?

Brain Rot or Just Natural Progress?

"Brain rot" sounds negative, implying AI weakens us. But history contains countless examples of cognitive offloading that proved beneficial:

No one laments our inability to do long division mentally now that we have calculators

Cursive writing was once essential; now it's decorative

People used to memorize phone numbers; now they don't need to

Not all cognitive decline is problematic. Cognitive offloading can be positive when it redirects effort toward more meaningful work.

The key distinction is whether we're losing something valuable.

If AI fully automates email writing and calendar management, are we losing anything essential? Probably not.

If AI automates creative writing, argumentation, or complex decision-making, does that degrade human abilities that do matter?

We should also consider robustness to AI failure. If systems fail, go offline, or can't be controlled, and people can't function without them, that's a genuine vulnerability.

We see a parallel happening in the world of LLM evals, too. Even though the state of the art for most of the AI tasks that were popular in 2017 is still a specialized neural network rather than an LLM, no one seems to care. The Hugging Face Open LLM leaderboard, once an industry obsession, is being retired because it "is slowly becoming obsolete; we feel it could encourage people to hill climb in irrelevant directions."

When is Over-Reliance Okay?

Over-reliance is acceptable when the skill is no longer relevant or when AI failure wouldn't be catastrophic. But it becomes problematic when:

The skill is foundational for higher-level reasoning (e.g., critical thinking, creativity) – atrophy.

AI failure would leave us helpless (e.g., doctors losing diagnostic skills) – fragility.

It limits our ability to recognize when AI is wrong – vulnerability.

AI and Technical Interviews

@im_roy_lee is the creator of InterviewCoder, a translucent on-screen overlay that is invisible to Zoom screen sharing and lets an LLM look at your screen, listen to your interview, and give you answers on the fly.

Ask any experienced engineer about technical interviews, and you'll likely get an eye roll. The industry has long debated LeetCode-style interviews — algorithmic whiteboarding under pressure. They provide standardized filtering but feel disconnected from real-world engineering, favoring those who grind problems over those with practical experience.

At my last company, we opted for interviews centered around pair programming — collaborative exercises that gave clearer pictures of how candidates actually think and problem-solve in realistic scenarios. Yet even at senior/staff levels in FAANG, LeetCode-style formats persist because, despite their flaws, they seem to correlate with something useful, for now.

InterviewCoder, an invisible AI for technical interviews, was created by a 20-year-old Columbia University student with a single purpose: to hack the technical interview process. The industry has broadly denounced the tool as one that empowers cheating, and Roy himself faces a pending suspension from Columbia, yet the service has allegedly hit $1M ARR in just 36 days.

The premise for tools like InterviewCoder is understandable: If you view these interviews as arbitrary gatekeeping, especially when all SWEs use some form of AI to write code on the actual job – why not use AI to level the playing field during the interview?

AI-assisted coding makes you feel stronger in the moment, but overuse may quietly erode your problem-solving abilities. You might ace an interview with AI assistance, but what happens when facing an ambiguous, high-stakes engineering problem without your AI crutch?

It's easy to criticize LeetCode interviews. But they do force development of skills companies still value — structured problem-solving, algorithmic fluency, clear communication. AI-assisted interviewing blurs the line: If everyone uses these tools, do technical interviews still test anything meaningful? Or do they become an AI-assistance arms race?

I don't have a definitive stance on whether tools like InterviewCoder are good or bad. But they act as a forcing function to clarify paradoxes in our processes and reflect on how we conceptualize competence in an AI-augmented world.

At the end of the day, I'll still grind some LeetCode problems the old-fashioned way. Not because I love it, but because the ability to struggle without AI remains valuable. Maybe not forever, but for now.

A Different Kind of Agent

At the end of February, I registered for the ElevenLabs Worldwide "AI Agents" hackathon with a few friends in New York who I've long wanted to hack with.

When we think of agents, we often imagine highly active entities, but my hackathon teammates and I wondered if an agent could be something very different: What if an agent could be intentionally quiet? Instead of trying to run your life or solve tasks end-to-end, what if it only stepped in when absolutely necessary?

Imagine a different kind of “agent” that is almost always quiet. It listens thoughtfully, as your quiet companion in conversations, offering gentle yet precise fact-checking but only when a significant discrepancy arises.

That's how we built Cue — a real-time fact-checking AI that listens to conversations but remains silent most of the time. It only speaks up if it detects a factual statement so off-base that it could derail discussion if uncorrected. No unsolicited suggestions, no micro-corrections, no overshadowing natural interaction.
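To make the "silent by default" idea concrete, here is a minimal sketch of an intervention gate. It is not Cue's actual implementation: the `ClaimCheck` fields, the `maybe_interject` function, the two thresholds, and the example claims are all hypothetical, and the sketch assumes some upstream fact-checker has already scored each spoken claim.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ClaimCheck:
    """Result of checking one spoken claim (all fields are hypothetical)."""
    claim: str
    correction: str
    confidence: float  # how sure the checker is that the claim is wrong, 0..1
    impact: float      # how much the error could derail the discussion, 0..1


def maybe_interject(check: ClaimCheck,
                    min_confidence: float = 0.9,
                    min_impact: float = 0.7) -> Optional[str]:
    """Return a correction only for high-confidence, high-impact errors.

    Staying silent (returning None) is the default outcome.
    """
    if check.confidence >= min_confidence and check.impact >= min_impact:
        return f"Quick check: {check.correction}"
    return None


# Made-up example claims, for illustration only.
checks = [
    # Slightly off, but harmless: the agent stays quiet.
    ClaimCheck("The meeting ran about an hour", "It ran 55 minutes", 0.95, 0.05),
    # Clearly wrong and likely to mislead: the agent speaks up.
    ClaimCheck("The Great Wall is visible from the Moon",
               "The Great Wall is not visible to the naked eye from the Moon",
               0.97, 0.8),
]

for check in checks:
    print(maybe_interject(check))
```

Requiring both conditions is the point of the design: a claim that is wrong but inconsequential never triggers an interruption, so micro-corrections are filtered out by construction.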

We wanted to explore a different relationship with AI—one where technology is practically invisible unless something truly consequential might slip by. In other words, an AI that actively respects human agency, allowing us to brainstorm, hypothesize, and even be wrong without constant correction. Because part of being human is figuring things out through trial and error.

This stems from the same idea behind letting students struggle with problems before tutors intervene. If tutors do all the heavy lifting, students learn less. Likewise, if AI agents are always in the driver's seat — dictating decisions, generating every email, completing every project — when do we build or refine our own thought processes?

AI is an incredible tool. But the more we rely on it for everything, the less we hone our ability to handle complexity or creative challenges. When AI eventually fails or gives incorrect answers, we may lack the instincts to spot errors.

Cue doesn't solve this conundrum — it's a small demonstration of an alternative path, a reminder that agents can be designed to stay in the background. They don't have to replace us; they can watch, wait, and offer nudges only when genuinely needed.

Building Cue taught us about calibration difficulties: intervene too frequently, and you foster dependency; too rarely, and the system becomes pointless. But we showed it's possible to create agents that respect our messy, flawed human way of learning rather than overshadowing it.
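One way to picture that calibration problem is to sweep a single intervention threshold over a small labeled set and count what happens at each setting. This is an illustrative sketch only: the scores, labels, and the `sweep` helper are made up, and it assumes each statement gets one score in [0, 1] from some checker.

```python
# (score, truly_consequential) pairs; made-up values for illustration only.
scored = [(0.95, True), (0.85, True), (0.80, False), (0.60, False),
          (0.55, True), (0.30, False), (0.20, False), (0.10, False)]


def sweep(scored, thresholds):
    """For each threshold, count interruptions, needless ones, and misses."""
    rows = []
    for t in thresholds:
        spoke = [(s, y) for s, y in scored if s >= t]
        interruptions = len(spoke)
        # Interruptions on statements that did not really matter.
        needless = sum(1 for _, y in spoke if not y)
        # Consequential errors the agent stayed silent on.
        missed = sum(1 for s, y in scored if y and s < t)
        rows.append((t, interruptions, needless, missed))
    return rows


for t, interruptions, needless, missed in sweep(scored, [0.25, 0.5, 0.9]):
    print(f"threshold={t}: interruptions={interruptions}, "
          f"needless={needless}, missed={missed}")
```

On this toy data, a low threshold interrupts six times (three needlessly) and misses nothing, while a high threshold interrupts once and misses two consequential errors. No setting is free, which is the tension described above.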

Hacking on Cue at the end of my time in NYC.

Final Thoughts

It's not going to be a question of what AI is capable of — it's what we want AI to be in our lives. We're at a juncture where AI's abilities are expanding rapidly, and it's tempting to let them run large parts of our personal and professional lives.

I'm not advocating blocking progress. But maybe we should each consider our relationship with these increasingly competent systems. Are we using them to complement and challenge us — or in ways that slowly erode our capacity to think, create, and be human?

Personal Note

Building Cue was one of my last projects before making the move from NYC to the Bay Area last week.

It’s been rewarding to reflect on how much has changed since I last called California home, and it feels great to return to familiar territory. The side-project list continues to grow, but I'm also beginning to explore what's next professionally. If you're hiring, I'd be delighted to connect.

P.S. If you're in the Bay Area and want to catch up, please reach out — would love to hear from you.